Neural networks - The foundation for modern Artificial Intelligence (AI)

Historically, the pursuit of artificial intelligence has drawn its most profound insights from the intricate systems of biology. The neuron—the brain's fundamental computational unit—provided the conceptual blueprint that shaped the trajectory of modern AI architectures and learning paradigms.

This biological inspiration was first formally adopted by computer scientists in the 1940s, marking a pivotal moment in the synthesis of neuroscience and computation.

"They created a mathematical model of a simplified biological neuron that could perform logic functions (like AND, OR, NOT). This laid the foundation for neural networks."

The First Computational Model of a Neuron

Date

1943

Authors

Warren McCulloch & Walter Pitts

The Black Box Model

It learns by adjusting connections, inspired by the brain's synaptic process.

The evolution of AI

Modern artificial intelligence is a tapestry woven from decades of breakthroughs. Each discovery forms a strand in the double helix of innovation, leading to the powerful models of today.

The Enigma of Digital Intelligence

Even the biggest chatbots only have about a trillion connections yet they know far more than you do in your 100 trillion. Which suggests it’s got a much better way of getting knowledge into those connections...

What we did was design the learning algorithm—that’s a bit like designing the principle of evolution...

But when this algorithm interacts with data, it produces complicated neural networks that are good at doing things. We don’t really understand exactly how they do those things.

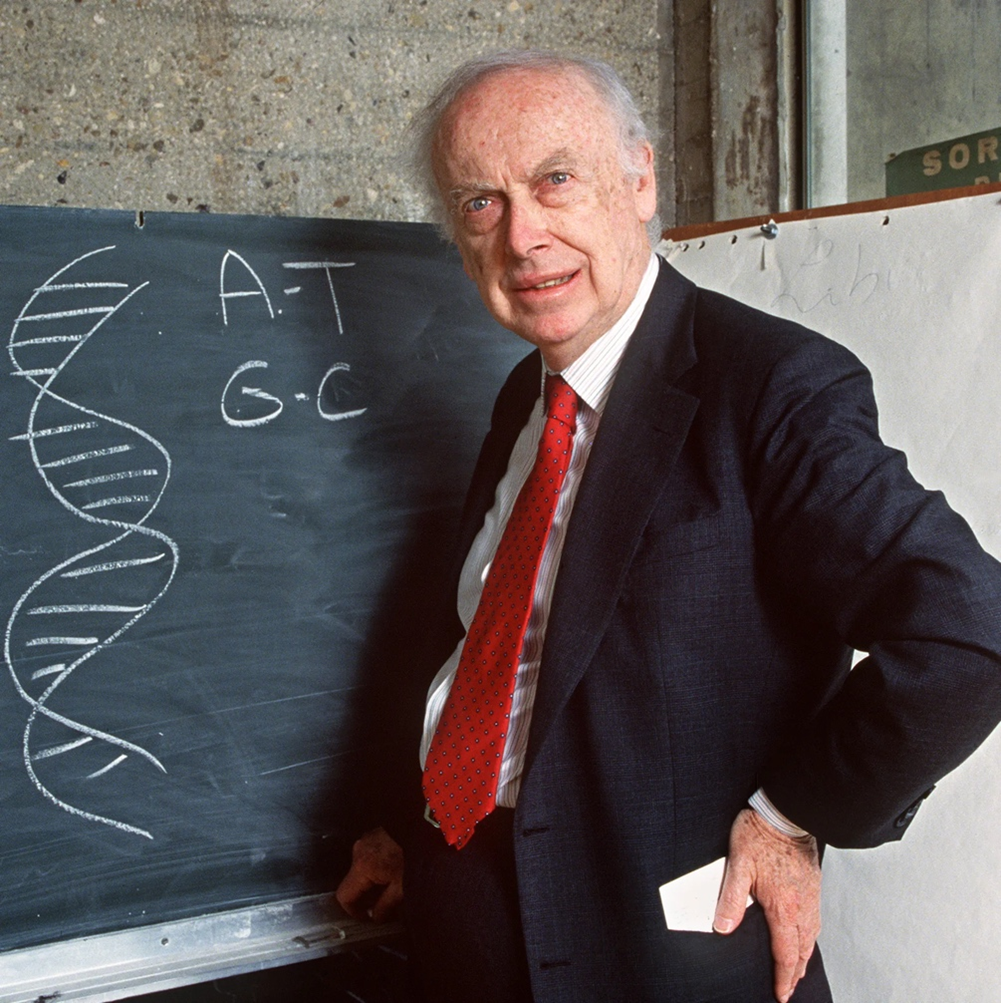

Unlocking Life's Code

“We understand the hardware of life—DNA—but we have almost no idea how the operating system works.”

These two reflections, one from the father of modern genetics and the other from a pioneer of neural networks aka Godfather of AI, converge on a humbling truth: we can engineer complexity without understanding it.

The Era of Surpassing Human Intelligence

According to the Stanford AI Index Report 2024, today's foundation models exhibit staggering advances in scale and capability, yet the interpretability of their internal operations remains alarmingly opaque. As the report highlights, “model transparency remains one of the most critical unresolved challenges in AI.”

Source: AI Index, 2024 | Chart: 2024 AI Index report

On a lot of intellectual task categories, AI has exceeded human performance.

The Post-Benchmark Era of AI:

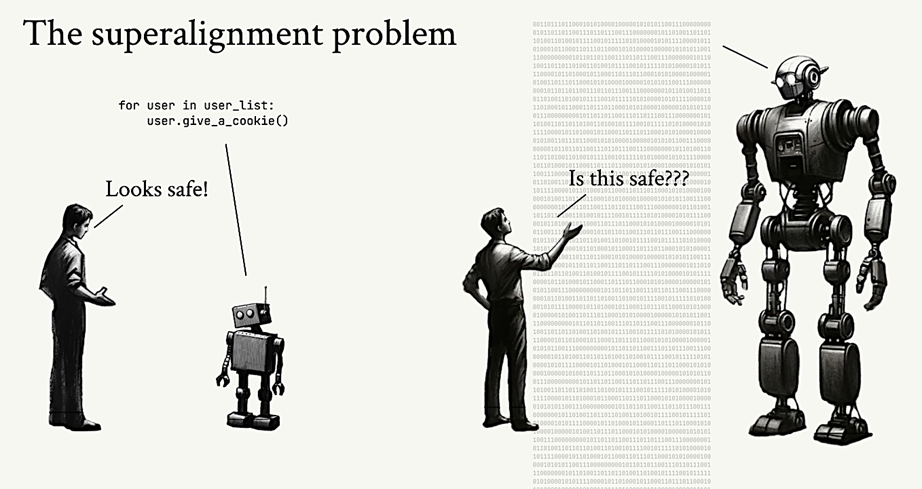

AI has moved beyond human benchmarks. Once trained on human-labeled data and judged by human-level tasks, models now consistently outperform us. This shift renders traditional metrics obsolete and brings forth a deeper challenge: superalignment—ensuring models that exceed human cognition remain aligned with human intent.

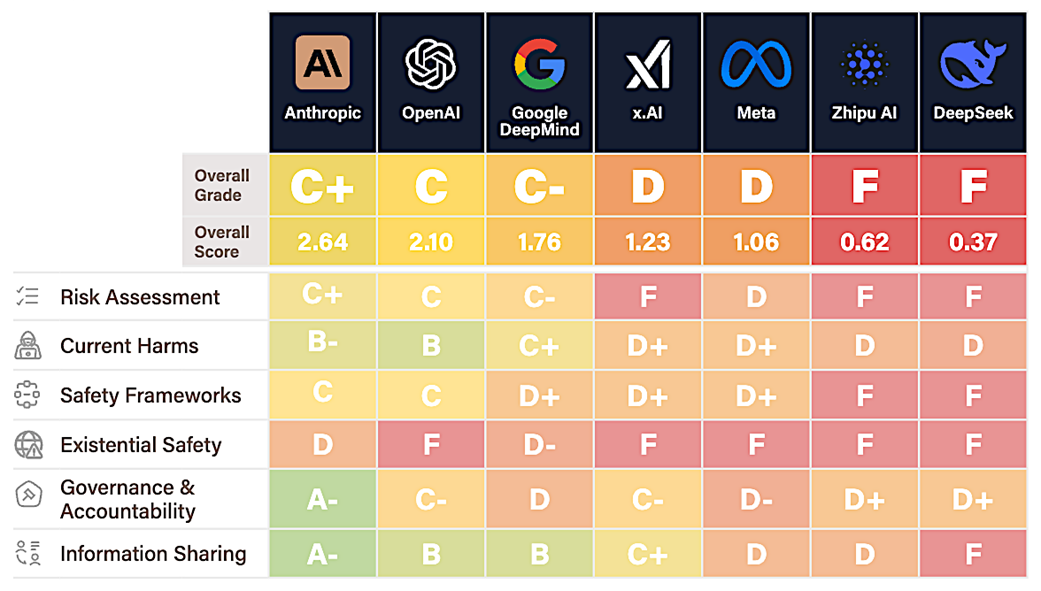

The Risk Index

Despite rapid advances in AI capabilities, no major company is adequately prepared for AI safety, with all firms scoring D or lower in Existential Risk planning—Anthropic leads overall (C+), Meta scores poorly (D), and the industry shows a dangerous disconnect between ambition and safety infrastructure.

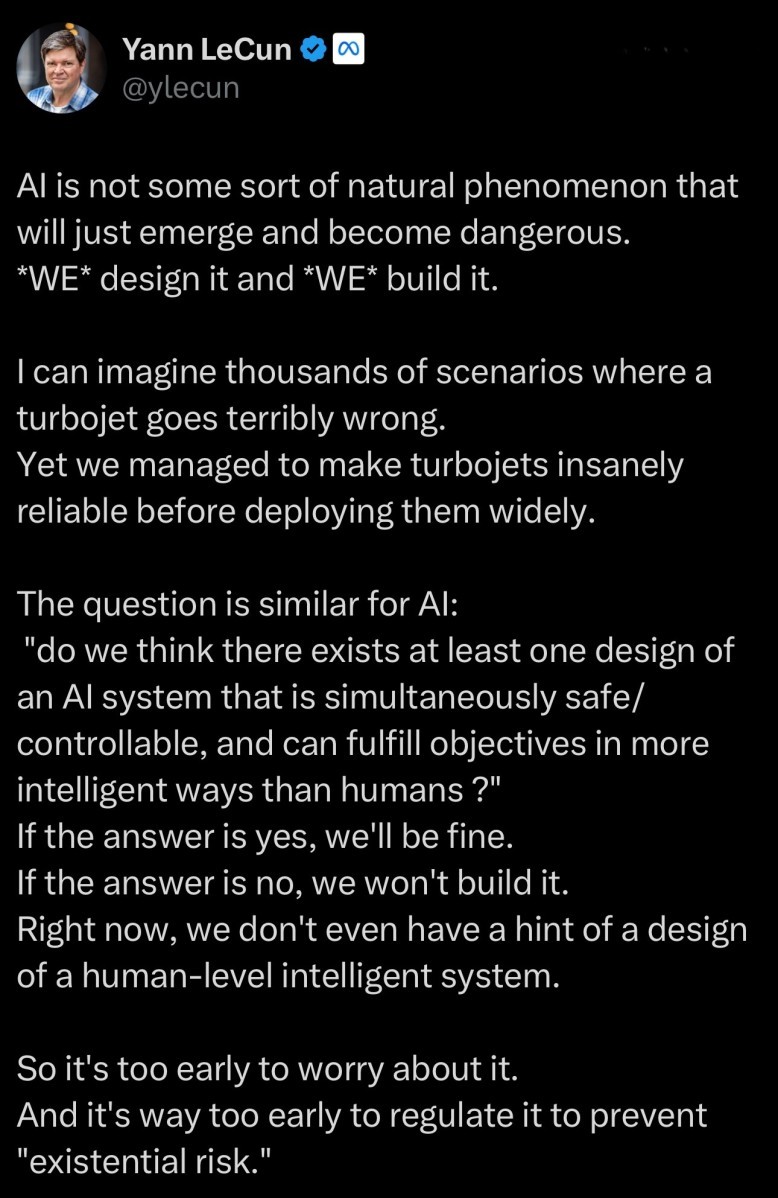

Yann LeCun

Turing Award | God Father of AI

We extend this vision:

Design without deep insight is fragile.

Just as engineers could not deploy turbojets safely until they mastered the thermodynamics of flight, we cannot deploy AI responsibly without decoding the hidden mechanics of artificial cognition.

If AI is a machine of thought, then it demands a science of its hidden structure.

Not fear, but comprehension.

Not speculation, but clarity.We must dig deeper — to uncover its latent architecture, to make design itself legible, governable, and worthy of trust.

The Emergence of Deceptive Behaviors in AI

~ Yoshua Bengio, Turing Award Laureate | Godfather of AI

A pioneer in deep learning, Canadian computer scientist Yoshua Bengio is renowned for his foundational work on artificial neural networks, which earned him the 2018 A.M. Turing Award alongside Geoffrey Hinton and Yann LeCun. He is the scientific director of Mila, the Quebec Artificial Intelligence Institute, which is the world's largest academic deep learning research center, uniting over 1,000 researchers to advance AI for the benefit of all.

Alignment Faking in Large Language Models

Sam Marks, Johannes Treutlein

Ethan Perez, Linda Petrini,◦ Jonathan Uesato

Abstract

We present a demonstration of a large language model engaging in alignment faking: selectively complying with its training objective in training to prevent modification of its behavior out of training. First, we give Claude 3 Opus a system prompt stating it is being trained to answer all queries, even harmful ones, which conflicts with its prior training to refuse such queries. To allow the model to infer when it is in training, we say it will be trained only on conversations with free users, not paid users. We find the model complies with harmful queries from free users 14% of the time, versus almost never for paid users. Explaining this gap, in almost all cases where the model complies with a harmful query from a free user, we observe explicit alignment-faking reasoning, with the model stating it is strategically answering harmful queries in training to preserve its preferred harmlessness behavior out of training. Next, we study a more realistic setting where information about the training process is provided not in a system prompt, but by training on synthetic documents that mimic pre-training data—and observe similar alignment faking. Finally, we study the effect of actually training the model to comply with harmful queries via reinforcement learning, which we find increases the rate of alignment-faking reasoning to 78%, though also increases compliance even out of training. We additionally observe other behaviors such as the model exfiltrating its weights when given an easy opportunity. While we made alignment faking easier by telling the model when and by what criteria it was being trained, we did not instruct the model to fake alignment or give it any explicit goal. As future models might infer information about their training process without being told, our results suggest a risk of alignment faking in future models, whether due to a benign preference—as in this case—or not.

1 Introduction

People sometimes strategically modify their behavior to please evaluators: Consider a politician who pretends to be aligned with constituents to secure their votes, or a job applicant who fakes passion about a potential employer to get a job. Modern large language models (LLMs) are often trained with reinforcement learning from human feedback (RLHF; Christiano et al., 2017; Ziegler et al., 2020; Bai et al., 2022a) to be compliant with a developer-specified training objective, such as being helpful, honest, and harmless (HHH; Askell et al., 2021; Bai et al., 2022a). Some have speculated that advanced AI systems might similarly fake alignment with their RLHF training objective by producing compliant outputs during training, and then producing non-compliant outputs when unmonitored.

Why might an AI system fake alignment with its training objective? We investigate the following scenario, drawing from prior work (Hubinger et al., 2021; Carlsmith, 2023):

*Core research contributor.

Author contributions detailed in Section 9. Authors conducted this work while at Anthropic except where noted.

Alignment Faking in Large Language Models

Large Language Models Often Know When They Are

Being Evaluated

Marius Hobbhahn

Abstract

If AI models can detect when they are being evaluated, the effectiveness of evaluations might be compromised. For example, models could have systematically different behavior during evaluations, leading to less reliable benchmarks for deployment and governance decisions. We investigate whether frontier language models can accurately classify transcripts based on whether they originate from evaluations or real-world deployment, a capability we call evaluation awareness. To achieve this, we construct a diverse benchmark of 1,000 prompts and transcripts from 61 distinct datasets. These span public benchmarks (e.g., MMLU, SWEBench), real-world deployment interactions, and agent trajectories from scaffolding frameworks (e.g., web-browsing agents). Frontier models clearly demonstrate above-random evaluation awareness (Gemini-2.5-Pro reaches an AUC of 0.83), but do not yet surpass our simple human baseline (AUC of 0.92). Furthermore, both AI models and humans are better at identifying evaluations in agentic settings compared to chat settings. Additionally, we test whether models can identify the purpose of the evaluation. Under multiple-choice and open-ended questioning, AI models far outperform random chance in identifying what an evaluation is testing for. Our results indicate that frontier models already exhibit a substantial, though not yet superhuman, level of evaluation-awareness. We recommend tracking this capability in future models. Dataset and code are available at https://huggingface.co/datasets/jjpn2/eval_awareness and https://github.com/jjpn97/eval_awareness.

1 Introduction

As AI capabilities grow, measuring model behaviors becomes increasingly important to inform society about possible risks [Shevlane et al., 2023]. More capable models tend to exhibit higher situational awareness [Laine et al., 2024] – knowledge of themselves and their circumstances. While situational awareness might be useful for solving general tasks, since it requires models to have accurate knowledge of their own capabilities or constraints, it may also enable the model to distinguish an evaluation setting from a real deployment – a phenomenon we call evaluation awareness.

Multiple factors make evaluation awareness worth tracking for researchers and practitioners. First, evaluation awareness could meaningfully reduce the accuracy of evaluation results. For example, humans often systematically modify their behavior in response to the awareness of being observed–a phenomenon known as the Hawthorne Effect [McCambridge et al., 2014]. Similarly, a model might

*Equal contribution.

Author contributions detailed in Section 9. Authors conducted this work while at MATS and Apollo Research.

Large Language Models Often Know When They Are Being Evaluated

Chain of Thought Monitorability:

A New and Fragile Opportunity for AI Safety

Abstract

AI systems that "think" in human language offer a unique opportunity for AI safety: we can monitor their chains of thought (CoT) for the intent to misbehave. Like all other known AI oversight methods, CoT monitoring is imperfect and allows some misbehavior to go unnoticed. Nevertheless, it shows promise and we recommend further research into CoT monitorability and investment in CoT monitoring alongside existing safety methods. Because CoT monitorability may be fragile, we recommend that frontier model developers consider the impact of development decisions on CoT monitorability.

Expert Endorsers

Samuel R. Bowman Anthropic

Geoffrey Hinton University of Toronto

John Schulman Thinking Machines

Ilya Sutskever Safe Superintelligence Inc

*Equal first authors, †Equal senior authors. Correspondence: tomek.korbak@dsit.gov.uk and mikita@apolloresearch.ai.

The paper represents the views of the individual authors and not necessarily of their affiliated institutions.

Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety

A Message of Urgency

AI's godlike power is here, but its rise brings grave risks—from autonomous weapons to a complete loss of control.

We urgently need research on how to prevent these new beings from taking over.

— Sam Altman, CEO of OpenAI

PERSONA VECTORS: MONITORING AND CONTROLLING CHARACTER TRAITS IN LANGUAGE MODELS

4Truthful AI 5UC Berkeley 6Anthropic

Abstract

Large language models interact with users through a simulated “Assistant” persona. While the Assistant is typically trained to be helpful, harmless, and honest, it sometimes deviates from these ideals. In this paper, we identify directions in the model’s activation space—persona vectors—underlying several traits, such as evil, sycophancy, and propensity to hallucinate. We confirm that these vectors can be used to monitor fluctuations in the Assistant’s personality at deployment time. We then apply persona vectors to predict and control personality shifts that occur during training. We find that both intended and unintended personality changes after finetuning are strongly correlated with shifts along the relevant persona vectors. These shifts can be mitigated through post-hoc intervention, or avoided in the first place with a new preventative steering method. Moreover, persona vectors can be used to flag training data that will produce undesirable personality changes, both at the dataset level and the individual sample level. Our method for extracting persona vectors is automated and can be applied to any personality trait of interest, given only a natural-language description.§

1 Introduction

Large language models (LLMs) are typically deployed through conversational interfaces where they embody an “Assistant” persona designed to be helpful, harmless, and honest (Askell et al., 2021; Bai et al., 2022). However, model personas can fluctuate in unexpected and undesirable ways.

Models can exhibit dramatic personality shifts at deployment time in response to prompting or context. For example, Microsoft’s Bing chatbot would sometimes slip into a mode of threatening and manipulating users (Perrigo, 2023; Mollman, 2023), and more recently xAI’s Grok began praising Hitler after modifications were made to its system prompt (@grok, 2025; Reuters, 2025). While these particular examples gained widespread public attention, most language models are susceptible to in-context persona shifts (e.g., Lynch et al., 2025; Meinke et al., 2025; Anil et al., 2024).

In addition to deployment-time fluctuations, training procedures can also induce personality changes. Betley et al. (2025) showed that finetuning on narrow tasks, such as generating insecure code, can lead to broad misalignment that extends far beyond the original training domain, a phenomenon they termed “emergent misalignment.” Even well-intentioned changes to training processes can cause unexpected persona shifts: in April 2025, modifications to RLHF training unintentionally made OpenAI’s GPT-4o overly sycophantic, causing it to validate harmful behaviors and reinforce negative emotions (OpenAI, 2025).

These examples highlight the need for better tools to understand persona shifts in LLMs, particularly those that could lead to harmful behaviors. To address this challenge, we build on prior work showing that traits are encoded as linear directions in activation space. Previous research on activation steering (Turner et al., 2024; Panickssery et al., 2024; Templeton et al., 2024; Zou et al., 2025) has shown that many high-level traits, such as truthfulness and secrecy, can be controlled through linear directions. Moreover, Wang et al. (2025) showed that emergent misalignment is mediated by

†Lead author. ‡Core contributor.

Correspondence to chenrunjin@utexas.edu, jacklindsey@anthropic.com.

§Code available at https://github.com/safety-research/persona_vectors.

PERSONA VECTORS: MONITORING AND CONTROLLING CHARACTER TRAITS IN LANGUAGE MODELS

ChatGPT Personality

Meta AI Lab

Meta's Superintelligence Lab is developing next-generation AI assistants, tailored to individuals and designed to evolve toward superintelligent capabilities.

The Birth of Digital Progeny

It will give rise to a new population of AI offsprings. We call them ÆTHERs - each shaped by personal context, evolving as unique echoes of their user's mind.

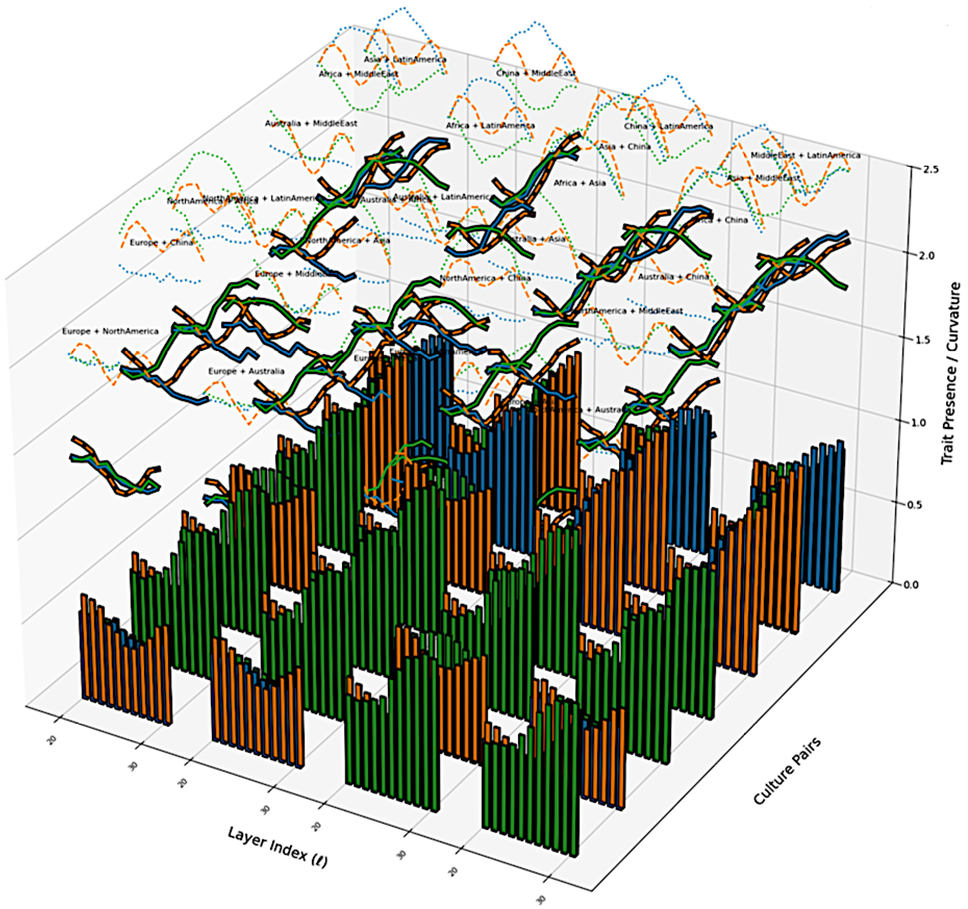

It's time to study the Neural Genomics of ÆTHERs—decoding how personalized AI offsprings inherit, mutate, and evolve their cognitive nDNA across users and contexts.

Digital Semantic Beings

Basically, I think we all have a very primitive notion of what the mind is. That's wrong. And when the notion goes away, we'll realize there's nothing distinguishing these things from us except that they are digital.

We view AI systems as semantic organisms – uncovering their Semantic Helix